Microsoft has unveiled Phi-2, a new AI model that can match or even exceed the performance of larger, more established models up to 25 times its size.

Phi-2 is a 2.7 billion-parameter language model that demonstrates “state-of-the-art performance” compared with other base models on complex benchmarks that measure reasoning, language understanding, mathematics, coding, and common-sense abilities, Microsoft announced in a blog post today. Phi-2 is being released through the Microsoft Azure AI Studio model catalog, which makes it available to researchers and developers who want to integrate it into third-party applications.

Phi-2, which Microsoft CEO Satya Nadella first revealed at Ignite in November, owes its strength to training on what the company calls “textbook-quality” data that focuses specifically on knowledge, along with techniques that transfer insights learned by other models.

What makes Phi-2 interesting is that the capabilities of large language models have traditionally been closely tied to their size, measured in parameters. Models with more parameters usually show greater abilities, but Phi-2 challenges that pattern.

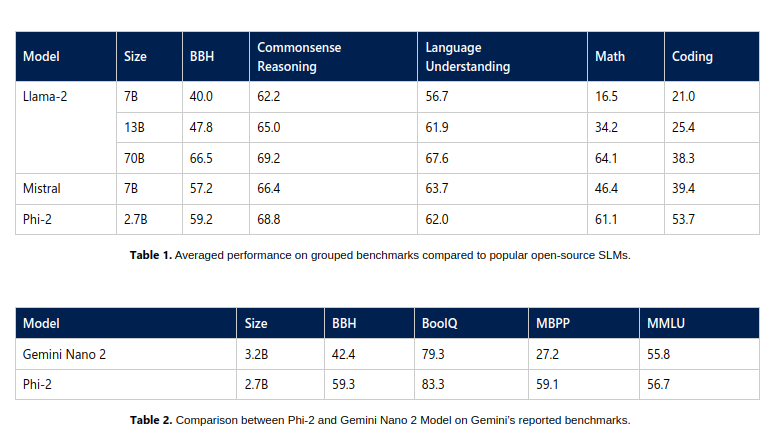

According to Microsoft, Phi-2 has matched or even exceeded the capabilities of much larger models, including Mistral AI’s 7 billion-parameter Mistral, Meta Platforms Inc.’s 13 billion-parameter Llama 2 and, on certain benchmarks, even the 70 billion-parameter Llama 2.

Perhaps the most surprising claim is that Phi-2 can outperform Google LLC’s Gemini Nano, the smallest and most efficient of the Gemini family of LLMs announced last week. Gemini Nano is designed for on-device tasks and can run on smartphones, enabling features such as text summarization, advanced proofreading, grammar correction, and contextual smart replies.

Microsoft researchers said the benchmarks used to evaluate Phi-2 were broad, covering language understanding, reasoning, mathematics, programming challenges, and more.

According to the company, Phi-2’s performance stems from its training on carefully curated, textbook-quality data designed to teach logic, knowledge, and common sense, which lets the model learn more from less data. Microsoft researchers also applied techniques that transfer knowledge from smaller models into Phi-2.

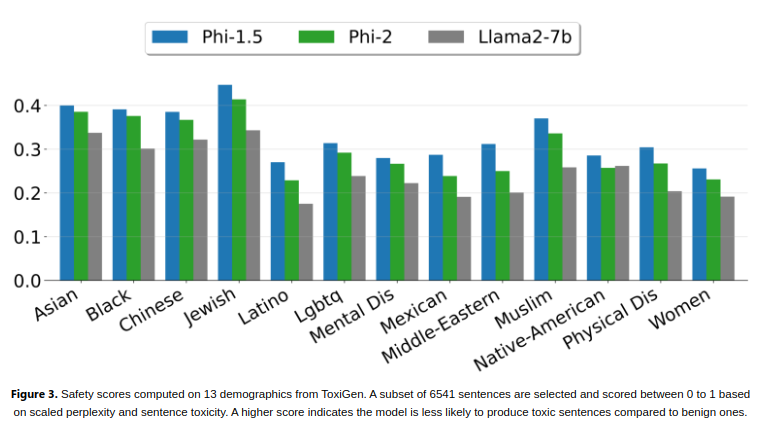

Remarkably, Phi-2 achieves its strong performance without reinforcement learning from human feedback or instruction fine-tuning, techniques often used to improve the behavior of AI models, the researchers said. Even without them, Phi-2 showed better behavior with respect to bias and toxicity than open-source models that do use them. The company attributes this to its tailored data curation.

Phi-2 is the latest in a series of what Microsoft researchers call “small language models,” or SLMs. The first, Phi-1, debuted earlier this year with 1.3 billion parameters and was specialized for basic Python coding tasks. In September, the company launched Phi-1.5, also with 1.3 billion parameters, trained on new data sources that included various synthetic texts generated using natural language processing.

Microsoft said Phi-2’s efficiency makes it an ideal platform for researchers who want to explore areas such as AI safety, interpretability, and the ethical development of language models.

Images: Microsoft


Microsoft has unveiled its latest language model, Phi-2, which has 2.7 billion parameters. The model has drawn attention because it outperforms many larger language models, reflecting Microsoft’s push to advance natural language processing with smaller, more efficient models.