Large language models like OpenAI’s GPT-4 and Google’s PaLM 2 have dominated the news cycle over the past few months. While many of us expected the world of AI to return to its usual slow pace, that hasn’t happened yet. Case in point: Google spent nearly an hour talking about artificial intelligence at its recent event. So it goes without saying that the company’s next-generation AI architecture, dubbed Gemini, deserves some attention.

Gemini can create and process text, images, and other types of data such as graphs and maps. That’s right, the future of AI is not limited to chatbots or image generators. Although these tools may seem impressive today, Google believes that they are far from maximizing the full potential of the technology. So, in this article, let’s break down what the search giant aims to achieve with Gemini, how it works, and why it points to the future of artificial intelligence.

What is Google Gemini? Beyond the simple language model

Gemini is Google’s next-generation AI architecture and the successor to PaLM 2, the model that has powered several of the company’s AI services, including the Bard chatbot and Duet AI in Workspace apps like Google Docs. Simply put, Gemini will allow these services to analyze or generate text, images, audio, video, and other types of data simultaneously.

Thanks to ChatGPT and Bing Chat, you’re probably familiar with machine learning models that can understand and generate natural language. It’s the same story with AI image generators: from a single line of text, they can create beautiful works of art or even photorealistic images. But Google’s Gemini goes one step further because it’s not tied to a single data type, which is why you might hear it called a “multimodal” model.

A multimodal model can decode many types of data simultaneously, similar to how humans use different senses in the real world.

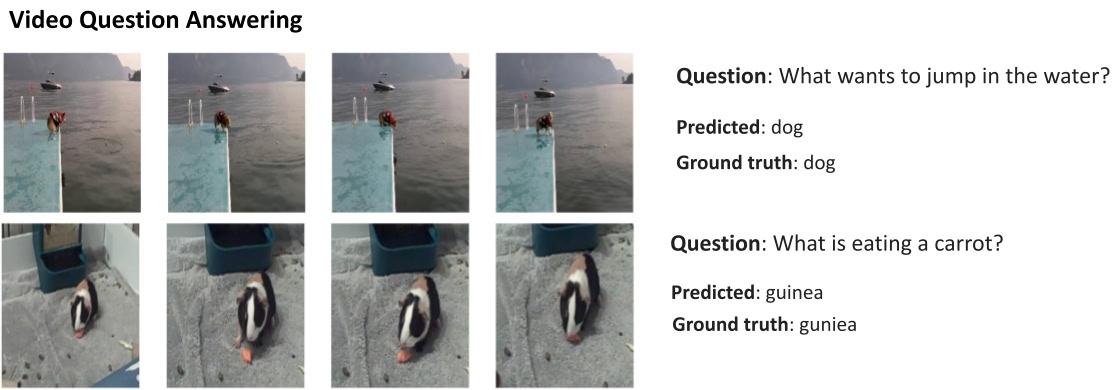

Here’s an example demonstrating the capabilities of a multimodal model, courtesy of the Google AI Research blog. It shows how the AI can not only extract features from a video to create a summary but also answer follow-up text questions. The company says Gemini can handle multiple modalities simultaneously rather than being limited to just one.

Gemini’s ability to combine visual and textual elements should also allow it to generate more than one type of data at the same time. Imagine an AI that can not only write the contents of a magazine but also design its layout and graphics. Or an AI that can summarize an entire newspaper or podcast based on the topics you care about most.

Gemini vs. GPT-4: How do they compare?


Gemini differs from most large language models in that it is not trained on text alone: Google says it built the model with multimodal capabilities in mind from the start. In that respect it resembles GPT-4, the reigning champion in the language modeling world, which can also handle more than one modality. However, the table in Google’s technical report (pictured below) indicates that Gemini outperforms GPT-4 in most of the tests listed.

So how does a multimodal AI like Google Gemini work? Several key components work in unison, starting with the encoder and decoder. When you provide input with more than one data type (such as a piece of text and an image), the encoder extracts the relevant details from each data type (modality) separately.
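The encoder step can be illustrated with a toy Python sketch. Everything in it is a hypothetical stand-in, not Gemini’s actual design: `encode_text` looks words up in a tiny random embedding table, and `encode_image` cuts a grayscale image into 2x2 patches and projects each patch with a random matrix. The point is only the shape of the idea: each modality gets its own encoder, and both emit lists of vectors of the same size (`EMBED_DIM`), so later stages can treat pieces of text and pieces of an image uniformly. Real encoders are large neural networks whose weights are learned during training.

```python
import random
from typing import Dict, List

Vector = List[float]

EMBED_DIM = 4  # hypothetical size of the shared embedding space
PATCH = 2      # hypothetical patch size (2x2 pixels)

_rng = random.Random(0)  # fixed seed so the random "weights" are reproducible


def _random_vector(size: int) -> Vector:
    return [_rng.uniform(-1.0, 1.0) for _ in range(size)]


def _matvec(matrix: List[Vector], vec: Vector) -> Vector:
    # Multiply a (rows x cols) matrix by a vector of length cols.
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


# Toy text encoder weights: one random vector per known word.
VOCAB = ["a", "dog", "runs", "in", "the", "park"]
TEXT_TABLE: Dict[str, Vector] = {word: _random_vector(EMBED_DIM) for word in VOCAB}
UNKNOWN: Vector = [0.0] * EMBED_DIM  # used for words outside the toy vocabulary

# Toy image encoder weights: projects a flattened 2x2 patch to EMBED_DIM.
IMAGE_PROJ: List[Vector] = [_random_vector(PATCH * PATCH) for _ in range(EMBED_DIM)]


def encode_text(text: str) -> List[Vector]:
    """Turn each whitespace-separated word into one embedding vector."""
    return [TEXT_TABLE.get(word, UNKNOWN) for word in text.lower().split()]


def encode_image(pixels: List[List[float]]) -> List[Vector]:
    """Turn each 2x2 patch of a grayscale image into one embedding vector.

    Assumes the image height and width are multiples of PATCH.
    """
    tokens = []
    for r in range(0, len(pixels), PATCH):
        for c in range(0, len(pixels[0]), PATCH):
            patch = [pixels[r + i][c + j] for i in range(PATCH) for j in range(PATCH)]
            tokens.append(_matvec(IMAGE_PROJ, patch))
    return tokens


if __name__ == "__main__":
    text_tokens = encode_text("A dog runs in the park")
    image_tokens = encode_image([[0.1, 0.2, 0.3, 0.4] for _ in range(4)])  # 4x4 image
    print(len(text_tokens), len(image_tokens))  # 6 4
```

Running the script prints how many vectors each encoder produced: six for the six words and four for the four patches of the 4x4 image, all of the same length.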

The AI then looks for important features or patterns in the extracted data using an attention mechanism, which essentially lets it focus on the parts of the input most relevant to the task at hand. For example, identifying the animal in the example above might involve looking only at the areas of the image that contain the moving subject. Finally, the AI combines the information it has learned from the different data types to make a prediction.
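The attention and fusion steps can be sketched the same way. This is plain scaled dot-product attention, the standard formulation used in Transformer models, applied to a single query; it is offered as an illustration, not as Gemini’s published implementation. Several simplifications are assumptions: the query vector stands in for a summary of the text, the image patch vectors are used directly as keys and values (real models first pass them through learned projection matrices), the two modalities are fused by simple element-wise addition, and the labels “dog” and “cat” with their vectors are made up for the example.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Tuple[Vector, List[float]]:
    """Scaled dot-product attention for one query.

    Returns the attention-weighted average of `values` and the weights themselves.
    """
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return context, weights


def fuse_and_predict(text_vec: Vector, image_context: Vector,
                     labels: Dict[str, Vector]) -> Dict[str, float]:
    """Fuse the two modalities by addition, then turn label scores into probabilities."""
    fused = [t + i for t, i in zip(text_vec, image_context)]
    probs = softmax([dot(fused, vec) for vec in labels.values()])
    return dict(zip(labels.keys(), probs))


if __name__ == "__main__":
    text_query = [1.0, 0.0]  # hypothetical summary of "What animal is this?"
    image_patches = [[0.0, 1.0], [3.0, 0.0], [0.0, -1.0]]  # patch 1 aligns with the query
    context, weights = attend(text_query, image_patches, image_patches)
    print([round(w, 3) for w in weights])
    prediction = fuse_and_predict(text_query, context, {"dog": [1.0, 0.0], "cat": [0.0, 1.0]})
    print(max(prediction, key=prediction.get))  # dog
```

Running it shows most of the attention weight landing on the one patch that aligns with the query, a miniature version of the model looking only at the relevant area of the image before combining what it found with the text to make a prediction.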

Gemini release date: Coming to a Google product near you

With Gemini, Google hopes to match or surpass GPT-4 before it falls behind for good. After first talking about the model in May 2023, the search giant released Gemini on December 6, 2023.

Google confirmed that the Gemini model comes in three sizes: Nano, Pro, and Ultra. The smallest, Gemini Nano, is well suited to generative AI on the go and is coming to Android devices, starting with the Pixel 8 Pro. Meanwhile, Gemini Pro will come to more Google services, like Gmail and Docs, in 2024.

Right now, the easiest way to experience Gemini’s abilities is via the Bard chatbot. According to Google, the December 2023 Bard update is the largest yet and integrates Gemini Pro with “advanced reasoning, planning, understanding, and more.” We’re also awaiting the launch of Assistant with Bard, which should enable back-and-forth verbal conversations with Gemini for the first time.

Common questions

Is Google Gemini better than ChatGPT?

Yes, Google claims that its Gemini model is better than the free version of ChatGPT. The larger Gemini Ultra model is also very competitive with GPT-4, but it is currently out of reach.

As the technology continues to advance, next-generation language models like Gemini are arriving with far broader capabilities. They promise to understand and generate human language better than earlier models, which makes them useful for a wide range of applications, from writing narratives and code to translating between languages. If they live up to that potential, they could change the way we interact with and use language in the digital age.